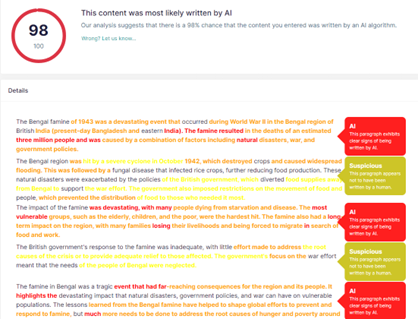

In our last blog, we advised students to invest in AI detection software like AI Detector Pro so they would know whether they might be falsely flagged for cheating. In this blog, we recommend AI Detector Pro for another reason. AI software is still new, and frankly, it won't necessarily give you the right answer. Even the media is testing it and finding mistakes. And AI-generated content is sometimes just bad writing. A major reason to use AI Detector Pro, with its risk scores and reports that highlight specific sections, is to pinpoint the worst writing and weakest analysis in any AI-generated content so you can improve it before you turn in the assignment.

Apart from getting the answer flat-out wrong, AI-generated content can be very simplistic. You should know which parts of the content you generated are likely to be flagged by detection software, and also what you need to clean up because of poor composition and weak analysis. That's why we say it's not just about avoiding cheating accusations; it's also about learning how to analyze beyond AI.

Here's an example: we generated a response on a lesser-known event, the Bengal Famine of 1943, during which millions of Indians died of starvation. We asked ChatGPT (the latest version, no less), "tell me about the Bengal Famine of 1943." This is the answer it produced:

The team at AIDP grades this answer as a C-. Objectively, it’s just really bad.

First, it's merely descriptive. It provides a date and the number of people who died. Second, its analysis is very shallow. For example:

I think we can safely assume that people die of starvation and disease when famines occur, and that the most vulnerable will be the hardest hit.

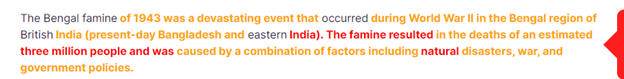

Let's look at the first paragraph: superficially, it looks like a strong start, but it isn't.

Which government administered the region at this time? It was the British colonial government, so why isn't that fact mentioned explicitly in this sentence? It probably had a lot to do with what happened to the region. Even the natural disaster (the cyclone of 1942) could be integrated into this paragraph more explicitly. This is simply inefficient writing. ChatGPT wasted the entire paragraph after the incredibly exciting opener of "The Bengal Famine." Even if you're in middle school, we assume you could come up with "The Bengal Famine" without the help of an AI text generator. The paragraph doesn't even end with a road-mapping sentence for the essay, such as "we will discuss each of these in turn, focusing on X." That's because the AI doesn't think. You do the thinking, and you should be choosing one driving factor behind the famine to focus on.

This weak start immediately tips off the educator that you are turning in a very basic, descriptive essay. Even if it isn't flagged as AI-generated, a professor will start ripping this content apart. We aren't educators, but we know bad composition when we see it. ChatGPT has already wasted most of the opening paragraph, which makes it look like you padded the essay because you don't know enough about the subject or how to apply the academic lens you are expected to use. You also don't need the word "famine" and the phrase "devastating event" in the same sentence; your professor will safely assume that famines are devastating. This is the AI equivalent of adjusting the margins to make an essay reach a required page length. Even if your school doesn't run your assignments through a detector, you would get a bad grade. With AIDP Pro, you could start revising much more quickly.

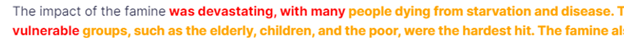

Let’s move on to the grand conclusion that ChatGPT drew up here.

This is also very simplistic. What consequences? What people? Does ChatGPT mean the countries that would later become Bangladesh and India? Bangladeshis and Indians? Why not use the names of these countries and peoples? It's strange, condescending, and out of step with today's standards for how we speak and write about countries and people. What far-reaching consequences, and why? What lessons did the Bengal Famine teach, and to whom? When did the world apply these lessons, and to what event? More needs to be done to address the root causes of hunger and poverty? This is laughable content. Is anyone suggesting we should do less to address hunger and poverty? Who would write or say that in 2023? It's not the kind of statement a human would make. That's our point: ChatGPT is a tool; it's not a great writer.

Basically, ChatGPT told you that the famine occurred in 1943 and that it was caused by war, a natural disaster, and government policy, so we should do more about wars, natural disasters, and government policies. At some point the Bengal Famine taught us these important lessons and they were applied, but where and when, no one knows. That's like telling a farmer whose corn is damaged by birds that he might want to think of birds as a root cause and do more about birds across his fields!

As the examples above show, AI Detector Pro went line by line to show you the weakest parts of this AI-generated content and where you could have fleshed out your analysis, saving you both time and a bad grade. After the first paragraph, you could have picked a track focusing on the colonial government, World War II, or the cyclone of 1942. You could have discussed how poverty driven by colonialism left this part of the world especially vulnerable to famine, researched where national food stores were being sent and whom Indians and Bangladeshis depended on for food, examined the geography and climate of the area and their agricultural impacts, or even explored Winston Churchill's attitude toward South Asians. AIDP Pro clearly showed you where the bad writing was and where you could have improved.

ChatGPT is a tool, and depending on your school's policies, you may be able to use it, but don't rely on it. It will never teach you to think and analyze.

Only you can do that.