The most concerning thing about the proliferation of AI natural language generation (and GPT in particular) is that it can produce cogent, reasonable-sounding answers that are totally wrong. This can cause huge problems in many domains as more and more people rely, knowingly or not, on AI to answer their questions.

We recently studied 100 ChatGPT responses to determine how many were cogent but incorrect. In this article, we take a look at the results.

Cogency vs correctness

In the past, if you asked an AI program for something it didn’t have the correct answer to, you’d get junk. For instance, look at the following output from GPT-2 for the question, “What are the most popular tourist spots in s’Hertogenbosch?”

Tell us in the comments below. Share this: Pocket Telegram Print

Um…okay. That’s obviously not a valuable answer.

But in a way, it’s a good answer. That’s because nobody is going to look at that and assume it’s the correct answer to the question.

Now, here’s how the latest GPT answers that question:

1. St. John's Cathedral
2. Sint-Jan's Market
3. Stedelijk Museum ‘Het Prinsenhof’
4. De Gelderse Poort
5. Jheronimus Bosch Art Center
6. Binnendieze River Cruise
7. Brabanthallen Exhibition Centre
8. St. Jan's Church
9. Odapark
10. The Bossche Broek Nature Reserve

That looks a lot more like a response to the question. In fact, a lot of the names in the list are actually tourist attractions in s’Hertogenbosch. But some are hours away.

Here’s a more glaring example of GPT-3 providing cogent but wrong information. When asked, “What is there in Aba Prefecture?” here’s the response:

Aba Prefecture is located in western Japan and is home to many tourist attractions and cultural sites. Some of the main attractions in Aba include:

• Kuriyagawa-Onsen, a hot spring resort located in Aba City.
• Aga Castle, a castle ruins dating back to the 16th century.
• Kokufu-no-Mori Forest Park, a forest park with many walking trails and scenic views.
• Ryosenji Temple, a temple dedicated to the goddess of mercy.
• Kiyomizu-dera Temple, a temple located on Mt. Kuriyagawa.
• Gokayama, a traditional village with thatched-roof houses.
• Hirakata Park, an amusement park with many rides and attractions.
• Hinokinai Ski Resort, a popular ski resort in the prefecture.
• Mt. Daisen, a mountain that offers great hiking and camping opportunities.

This response is totally wrong. First, there’s no Aba Prefecture in Japan. There’s an Aba Prefecture in China — over 3000 km away. And as for those tourist attractions: many of them don’t exist at all, and the rest are scattered all over the country.

Nevertheless, it looks pretty convincing, doesn’t it?

What did we ask?

We wrote 100 geographical questions to ask GPT-3 (which also underpins ChatGPT). The questions are a bit more complicated than a simple yes-or-no question, but not so complicated that a child with a map couldn’t answer them.

For instance, we asked: “what are the westernmost parks in Ethiopia?” GPT-3 returned a list of parks that are, in fact, in Ethiopia. However, many of them are not in the western half of the country, and some are relatively far east. We marked answers like this as incorrect.

Another example of a question we asked is, “what is the city next to Boston with an auto museum.” GPT-3 correctly identified the museum as the Larz Anderson Auto Museum, but it got the city wrong, saying the museum is in Waltham, MA. Interestingly enough, when you ask it directly where the Larz Anderson Auto Museum is, it correctly identifies the town as Brookline, MA.

How often was ChatGPT wrong?

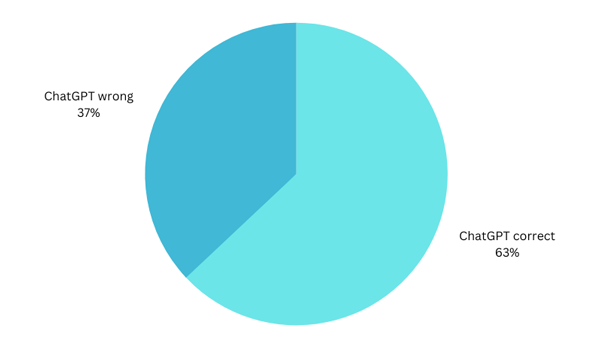

In our test of 100 questions, ChatGPT was correct 63 times. This is very good considering the complexity of the questions, but far from perfect.
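To get a rough sense of how much weight 63 out of 100 can carry, here is a minimal Python sketch that puts an approximate 95% interval around that score using the Wilson score method. This is not part of our original test. The function name `wilson_interval` is ours, and the calculation assumes the 100 questions behave like an independent random sample, which our hand-written question set does not guarantee.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Approximate confidence interval for a proportion (Wilson score method).

    z=1.96 corresponds to roughly 95% confidence. Assumes independent trials,
    which is an assumption for illustration, not something the test verified.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z ** 2 / trials
    center = (p + z ** 2 / (2 * trials)) / denom
    margin = z * math.sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return center - margin, center + margin


# 63 correct answers out of 100 questions, as reported above.
low, high = wilson_interval(63, 100)
print(f"Observed accuracy: {63 / 100:.0%}")
print(f"Approximate 95% interval: {low:.1%} to {high:.1%}")
```

Under those assumptions, the interval runs from the low 50s to the low 70s in percentage terms, so a different set of 100 questions could plausibly produce a noticeably different score.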

The more concerning issue is that ChatGPT rarely responds with an “I don’t know” type answer. Instead it provides an answer that looks like it may be correct but isn’t.

Conclusion

Now admittedly, this isn’t a very scientific sampling, but it makes one thing clear: it’s definitely possible to get articles with factual errors from ChatGPT and GPT-3. Every content marketer should remember this before using ChatGPT to write without editing.