AI researchers have plenty of GPUs to choose from for training, but surprisingly few affordable options can actually handle the load. Cards made by AMD (formerly ATI) or Intel run popular AI libraries poorly, if at all, and GPU memory requirements are so high that most consumer and workstation Nvidia GPUs are not usable for AI.

In practice, running even a smaller Transformer model in 2023 takes more than 20 GB of GPU memory. That leaves only two consumer GPUs that qualify, the RTX 3090 and RTX 4090 (24 GB each), plus a handful of workstation cards in the A4500 to A6000 range.
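To make the memory claim concrete, here is a rough estimate in Python. It assumes a common rule of thumb for mixed-precision training with the Adam optimizer (about 16 bytes per parameter for weights, gradients and optimizer state) and deliberately ignores activations, batch size, sequence length and framework overhead, so real usage will be higher. The parameter counts are illustrative, not the models we tested, and the function names are hypothetical.

```python
# Assumed rule of thumb: mixed-precision training with Adam needs about
# 16 bytes per parameter before activations are counted:
#   2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
#   + 4 (Adam first moment) + 4 (Adam second moment)
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 4 + 4

GIB = 1024 ** 3

# Advertised VRAM, treated as GiB for this rough comparison
CARDS = {"RTX 4090": 24, "RTX A6000": 48}


def training_memory_gib(num_params: int,
                        bytes_per_param: int = BYTES_PER_PARAM_TRAINING) -> float:
    """Estimate GPU memory (GiB) for weights, gradients and optimizer state only."""
    return num_params * bytes_per_param / GIB


def fits(num_params: int, vram_gib: float) -> bool:
    """True if the estimate (excluding activations and CUDA overhead) is below the VRAM."""
    return training_memory_gib(num_params) < vram_gib


if __name__ == "__main__":
    for params in (350_000_000, 1_300_000_000, 2_700_000_000):
        est = training_memory_gib(params)
        verdict = ", ".join(
            f"{name}: {'fits' if fits(params, gib) else 'too big'}"
            for name, gib in CARDS.items()
        )
        print(f"{params / 1e9:.2f}B params -> ~{est:.1f} GiB ({verdict})")
```

Under these assumptions, a 1.3B-parameter model already needs roughly 19 GiB before activations are counted, while a 2.7B-parameter model exceeds the 4090's 24 GB but still fits on the A6000's 48 GB.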

Interestingly, the A-series cards are a generation behind the RTX 40 series, even though they can have much more memory. An A6000, for example, is more useful for AI work than an RTX 4090 because it has double the VRAM (48 GB vs. 24 GB), even though the 4090 is faster.

So the big questions are 1) how much faster is an RTX 4090 than an A6000 in AI training tasks, and 2) which one is the better purchase for AI developers?

RTX 4090 vs RTX A6000: speed

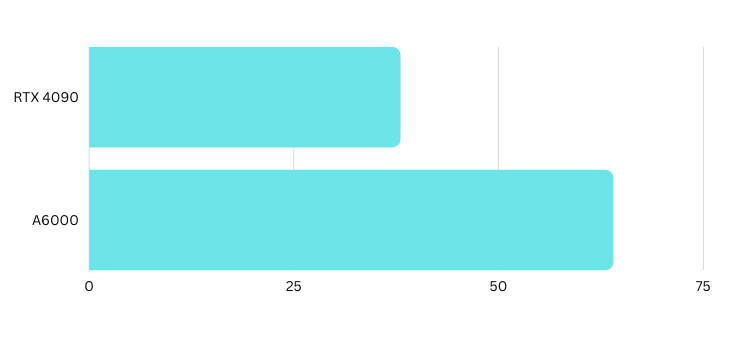

The Nvidia RTX 4090 is a generation ahead of the A6000 (Ada Lovelace vs. Ampere), so it is faster. But how much faster?

We trained a small and a medium-sized model on both GPUs to find out. Here are the results:

| Model | RTX 4090 | RTX A6000 |
|---|---|---|
| Small model, 250,000 training records | 37 min 53 sec | 1 hour 4 min 22 sec |
| Medium-sized model, 1M training records | 4 hours 19 min 38 sec | 7 hours 51 min 14 sec |

Across both runs, the RTX 4090 trained about 1.7 to 1.8 times as fast as the RTX A6000. That makes it considerably more useful, at least in situations where the model fits in its memory.
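The speedup and time-saved figures can be checked directly from the measured times in the table above. This short Python sketch converts each run to seconds and compares the two cards; the helper names are our own, not part of any library.

```python
def to_seconds(hours: int = 0, minutes: int = 0, seconds: int = 0) -> int:
    """Convert an h/min/s duration to whole seconds."""
    return hours * 3600 + minutes * 60 + seconds


# Wall-clock training times from the benchmark table: (RTX 4090, RTX A6000)
RESULTS = {
    "small (250,000 records)": (to_seconds(0, 37, 53), to_seconds(1, 4, 22)),
    "medium (1M records)": (to_seconds(4, 19, 38), to_seconds(7, 51, 14)),
}


def speedup(t_4090: int, t_a6000: int) -> float:
    """How many times faster the RTX 4090 finished the same job."""
    return t_a6000 / t_4090


def time_saved(t_4090: int, t_a6000: int) -> float:
    """Fraction of the A6000's training time saved by using the RTX 4090."""
    return 1 - t_4090 / t_a6000


if __name__ == "__main__":
    for name, (t_4090, t_a6000) in RESULTS.items():
        print(f"{name}: {speedup(t_4090, t_a6000):.2f}x faster, "
              f"{time_saved(t_4090, t_a6000):.0%} less time")
```

For these runs it reports about 1.70x (41% less time) for the small model and 1.81x (45% less time) for the medium model, which is somewhat short of a full 2x.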

So should you choose the RTX 4090 or A6000?

The problem with the test above is that it was severely restricted: we couldn't fit any reasonably large model on the RTX 4090 because of its 24 GB memory limit.

This meant we couldn't do much of the AI training we wanted, so for our purposes the 4090 was essentially useless. You'll quickly run into the same problem if you try to do AI training on a 4090 or 3090.

In the end, we do most of our training, and even inference (generating completions), on the A6000, since the 4090 runs out of memory so easily. Even when a model does fit into the 4090's 24 GB, allocating one additional tensor to run it can push the GPU over its limit and cause an out-of-memory error.
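One way to soften the "one extra tensor" problem is to check headroom before allocating. The sketch below is a hypothetical, framework-agnostic helper: it computes a tensor's size from its shape and dtype and compares it with the free memory you pass in, keeping a safety reserve for allocator fragmentation and CUDA overhead (the 512 MiB default is an assumption, not a measured value). In PyTorch, the free byte count could come from `torch.cuda.mem_get_info()`, which returns `(free, total)` in bytes.

```python
import math
from collections.abc import Sequence

MIB = 1024 ** 2
GIB = 1024 ** 3

# Bytes per element for a few common dtypes
DTYPE_BYTES = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def tensor_bytes(shape: Sequence[int], dtype: str = "float32") -> int:
    """Size in bytes of a dense tensor with the given shape and dtype."""
    return math.prod(shape) * DTYPE_BYTES[dtype]


def can_allocate(free_bytes: int, shape: Sequence[int], dtype: str = "float32",
                 reserve_bytes: int = 512 * MIB) -> bool:
    """True if the tensor fits in free memory while keeping `reserve_bytes` spare.

    `reserve_bytes` is an assumed safety margin for allocator fragmentation
    and CUDA context overhead, not a measured value.
    """
    return tensor_bytes(shape, dtype) <= free_bytes - reserve_bytes


if __name__ == "__main__":
    free = 1 * GIB  # hypothetical: 1 GiB left on a nearly full 24 GB card
    print(can_allocate(free, (8, 2048, 4096), "float16"))   # 128 MiB tensor
    print(can_allocate(free, (32, 4096, 8192), "float32"))  # 4 GiB tensor
```

A check like this only avoids the crash; it does not create memory, so on a 24 GB card the practical alternatives remain smaller batches, lower precision, or a card with more VRAM.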

Conclusion

The RTX 4090 is an extremely fast and capable card, and we would have loved to cut our training time by roughly 40 to 45% and save on energy costs. Unfortunately, it simply can't handle many of the models you will end up using in deep learning.

For AI work, the RTX A6000's larger memory makes it more capable than the RTX 4090, despite the 4090's higher speed, and that makes it the preferred choice for cutting-edge AI applications.